AB Experiments (Satori)

Run AB Experiments with Satori, the LiveOps solution from HeroicLabs.

Introduction

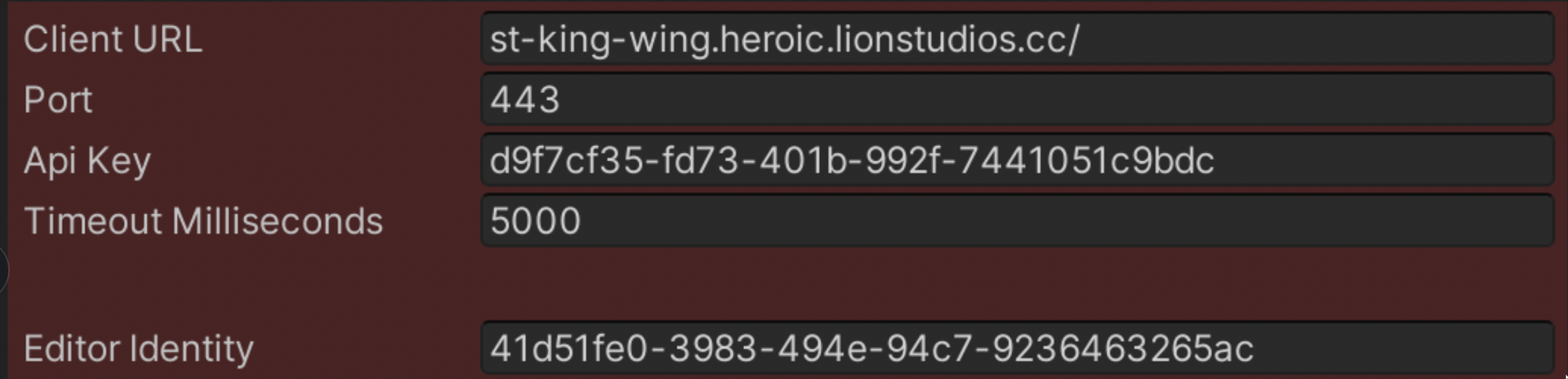

AB Experiments are set up on the Satori Dashboard. Ensure your Satori instance is set up and you can access your game dashboard.

This document provides instructions for setting up the Example AB Test use case described below.

Satori’s terminology differs from that of other tools, such as Firebase. The corresponding Firebase and Satori terms are:

Firebase → Satori

- Remote Config → Feature Flag

- Experiment Cohort → Experiment Variant

Note: Looker also uses "Experiment Cohort" to refer to an Experiment Variant.

Requirements

- Set up Remote Configuration first, since experiments depend on remote configuration. Follow the guide here: Remote Configuration

- Design your experiment - what are your hypotheses, goals, and game levers? Please see our Experiment Best Practices.

- LionSDK will automatically fire the `ab_cohort` event to LionAnalytics, allowing us to analyze the experiment results.

   - Every experiment needs a unique name, plus a special feature flag (unique to that experiment) that tracks which variant the player has been assigned. LionSDK automatically checks for a feature flag with the following naming convention and uses it to fire the required `ab_cohort` event for you:

      - `exp_[EXPERIMENT NAME]` (replace `[EXPERIMENT NAME]` with your unique name)

- Ensure you have all necessary events firing in your game that you will use for analysis. See our Analytics: 1. Planning guide for more information.


- Read Satori’s conceptual documentation here to understand experiments and experiment phases. If you need to change the configuration of a running experiment, stop the current phase, make your changes, and start a new phase.

- See the example below to better understand how to set up your experiment correctly.

- Analyze Results - Once your experiment is complete, please see the Dashboards & Analysis section here: Dashboard & Analysis
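To make the `exp_[EXPERIMENT NAME]` naming convention concrete, here is a minimal Python sketch of how a client could recognise experiment flags among the feature flag values a player received and turn each one into an (experiment, variant) pair, which is the information the `ab_cohort` event needs. This is not LionSDK's implementation: the helper names, the plain `dict` of flag values, and the `experiment`/`variant` field names are hypothetical.

```python
# Hypothetical sketch of the exp_[EXPERIMENT NAME] convention; not LionSDK code.
EXPERIMENT_FLAG_PREFIX = "exp_"


def experiment_flag_name(experiment_name: str) -> str:
    """Build the flag name for an experiment, e.g. 'interstitialStart' -> 'exp_interstitialStart'."""
    if not experiment_name:
        raise ValueError("experiment name must be non-empty")
    return EXPERIMENT_FLAG_PREFIX + experiment_name


def cohort_assignments(flags: dict[str, str]) -> list[dict[str, str]]:
    """Return one hypothetical ab_cohort payload per exp_ flag found in `flags`.

    `flags` maps feature flag names to the values the player received.
    """
    assignments = []
    for name, value in sorted(flags.items()):
        # Only flags following the exp_ convention (with a non-empty name) identify an experiment.
        if name.startswith(EXPERIMENT_FLAG_PREFIX) and len(name) > len(EXPERIMENT_FLAG_PREFIX):
            assignments.append({
                "experiment": name[len(EXPERIMENT_FLAG_PREFIX):],
                "variant": value,
            })
    return assignments


if __name__ == "__main__":
    player_flags = {"exp_interstitialStart": "aggressive", "interstitialMinLevel": "0"}
    print(cohort_assignments(player_flags))
    # [{'experiment': 'interstitialStart', 'variant': 'aggressive'}]
```

Ordinary flags such as `interstitialMinLevel` are ignored, which is why the experiment flag needs its own unique `exp_` name.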

Example Experiment (AB Test) Use Case

- The Product team wants to set up an experiment to optimize the level at which interstitials start showing in the game.

- For that purpose, each experiment variant/cohort will receive a different value for the `interstitialMinLevel` Feature Flag ("FF"), allowing the experimenter to treat users differently by giving them different `interstitialMinLevel` values.

- There will be three experiment variants: "passive", "aggressive", and "control".

- Users will be split equally into the experiment variants:

| Variant | Allocation | interstitialMinLevel | Description |
|---|---|---|---|
| Passive | 33% of players | 5 | Users will receive interstitials after level 5 |
| Aggressive | 33% of players | 0 | Users will receive interstitials after level 0 |
| Control | 33% of players | 3 | Users will receive interstitials after level 3 |

- The experiment will be concluded and existing participants grandfathered: they will continue to receive their existing treatments, while no new users will be added to the experiment.
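The variant table can be written as plain data, together with the check a client might run before showing an interstitial. This is a sketch under stated assumptions: the `INTERSTITIAL_MIN_LEVEL_BY_VARIANT` mapping and `should_show_interstitial` helper are hypothetical, levels are assumed to start at 1, and "after level N" is assumed to mean that the current level is strictly greater than `interstitialMinLevel`. Adjust the comparison to your game's own definition.

```python
# Hypothetical encoding of the example's treatments (values from the variant table).
INTERSTITIAL_MIN_LEVEL_BY_VARIANT = {
    "passive": 5,
    "aggressive": 0,
    "control": 3,
}


def should_show_interstitial(current_level: int, interstitial_min_level: int) -> bool:
    """Assumption: "after level N" means the current level is strictly greater than N."""
    return current_level > interstitial_min_level


if __name__ == "__main__":
    # Which of levels 1..7 would show interstitials in each variant.
    for variant, min_level in INTERSTITIAL_MIN_LEVEL_BY_VARIANT.items():
        levels = [lvl for lvl in range(1, 8) if should_show_interstitial(lvl, min_level)]
        print(variant, levels)
    # passive [6, 7]
    # aggressive [1, 2, 3, 4, 5, 6, 7]
    # control [4, 5, 6, 7]
```

In the game itself, the minimum level comes from the `interstitialMinLevel` feature flag rather than a hard-coded table. The mapping only spells out what each variant receives.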

Example Dashboard & Client Setup

-

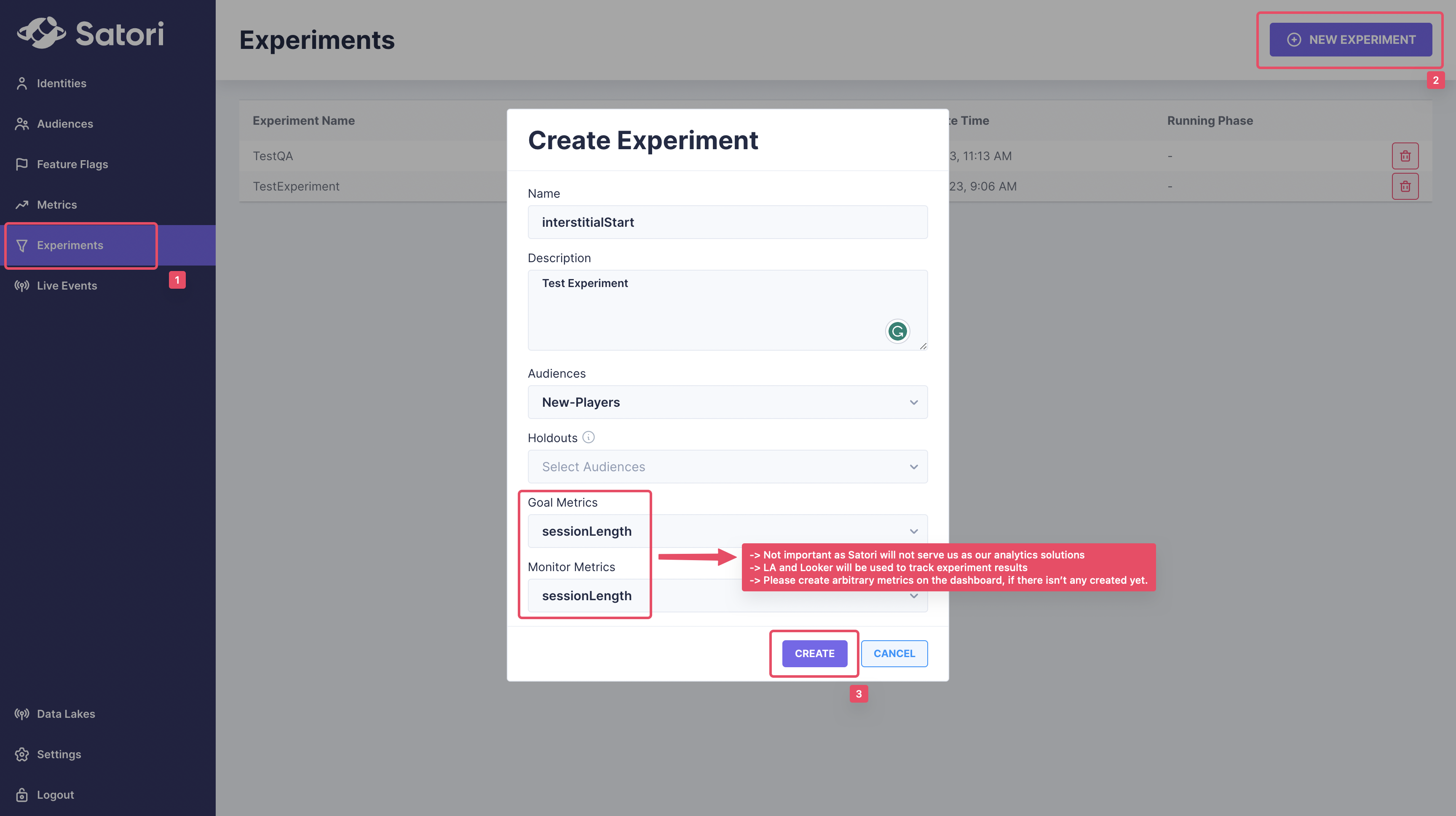

Create an

ExperimentcalledinterstitialStartfollowing the instructions provided here

👉

Goal & Monitoring Metrics: The user is required to select a metric; however, you can choose any arbitrary metric. Satori uses metrics to display the experiment results on the Satori Dashboard. However, Lion will not use the Satori Dashboard for analytics purposes and will instead display the results using the Analytics package on Looker.

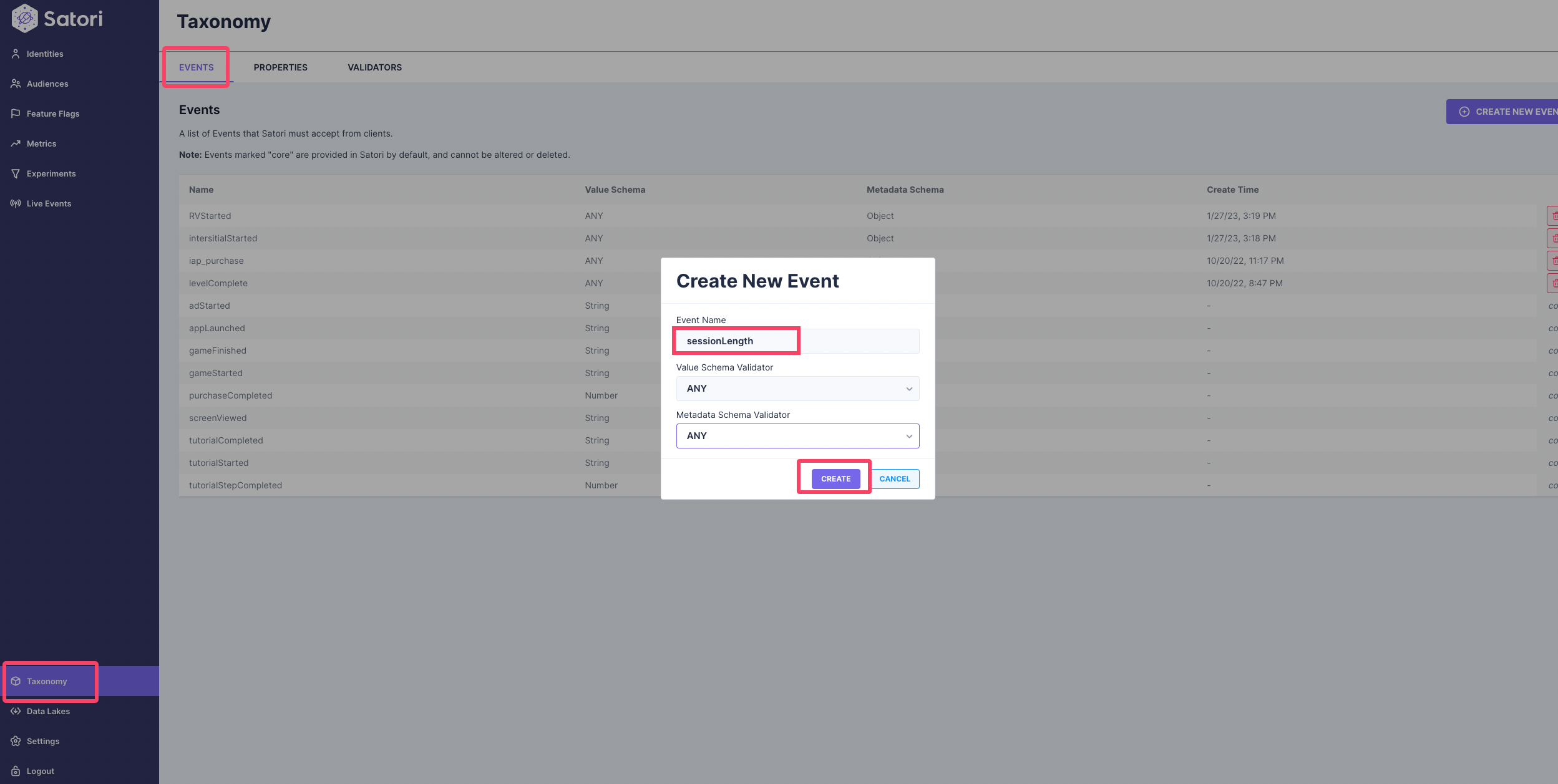

How do you create a metric if there isn’t any metric setup?

2. On the Satori dashboard, create a main feature flag for your experiment named `exp_interstitialStart`.
   - The default value of the `exp_interstitialStart` FF should be `control`.
   - Ensure that the experiment’s name, set up in step 1 above, replaces `[EXPERIMENT NAME]` in `exp_[EXPERIMENT NAME]`.
   - This feature flag passes the experiment and experiment variant name to the Analytics package’s `ab_cohort` event. This FF does not need to be implemented in the game.

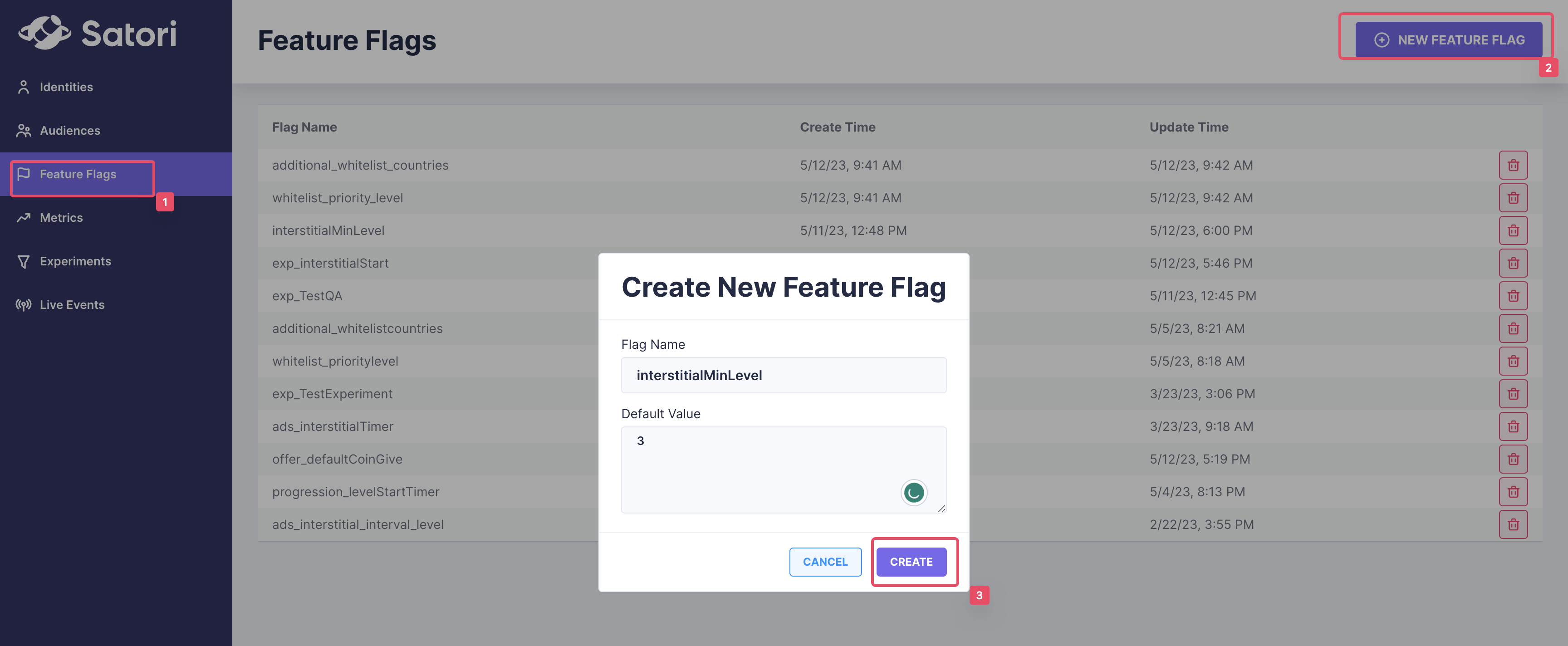

3. On the Satori dashboard, create one or more Feature Flag(s) for the variables you want to test.
   - Example: create a feature flag named `interstitialMinLevel` on the Satori dashboard with a default value of `3`.
   - Feature Flag values accept `string`, `integer`, `float`, and `boolean` types:
      - For `string`, pass values in the following format → `dummy`
      - For `integer`, pass values in the following format → `0`
      - For `float`, pass values in the following format → `1.5`
      - For `boolean`, pass values in the following format → `true` | `false`
   - Let your developer team know that they will use the value of the `interstitialMinLevel` feature flag in the client, following the instructions in Remote Configuration.

4. Add FeatureFlags to the experiment.

   - Users participating in the experiment will receive values from these FFs, overridden for each experiment variant.
   - Add the FeatureFlags created in steps 2 and 3:
      - `interstitialMinLevel`: this FF treats users differently by defining the minimum level at which interstitials appear.
      - `exp_interstitialStart`: this FF's value informs the client of the user's experiment variant name. The LiveOps package will automatically fire the correct `ab_cohort` event and parameters to our backend.
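Participants receive per-variant overrides of each flag, and the values arrive in the formats listed for Feature Flags above. The Python sketch below shows how client code might turn a raw value in those formats into a typed value, falling back to the flag's default (`3` for `interstitialMinLevel`) when the value is missing or malformed. The `parse_flag_value` and `resolve_flag` helpers are hypothetical and are not part of the Satori or LionSDK client API. In the game, follow the Remote Configuration guide.

```python
# Hypothetical helpers; not the Satori or LionSDK client API.
from typing import Optional, Union

FlagValue = Union[str, int, float, bool]


def parse_flag_value(raw: str, flag_type: type) -> FlagValue:
    """Convert a raw flag value in the documented formats: dummy, 0, 1.5, true|false."""
    if flag_type is bool:
        lowered = raw.strip().lower()
        if lowered not in ("true", "false"):
            raise ValueError(f"not a boolean flag value: {raw!r}")
        return lowered == "true"
    if flag_type is int:
        return int(raw)
    if flag_type is float:
        return float(raw)
    if flag_type is str:
        return raw
    raise TypeError(f"unsupported flag type: {flag_type!r}")


def resolve_flag(raw: Optional[str], flag_type: type, default: FlagValue) -> FlagValue:
    """Return the parsed value, or `default` if the value is missing or malformed."""
    if raw is None:
        return default
    try:
        return parse_flag_value(raw, flag_type)
    except ValueError:
        return default


if __name__ == "__main__":
    print(resolve_flag("5", int, 3))   # 5: e.g. the passive variant's override
    print(resolve_flag(None, int, 3))  # 3: falls back to the flag default
```

Falling back to the dashboard default keeps the client behaving like the `control` treatment when a value cannot be read. That is a defensive choice made for this sketch, not something the SDK is documented to do.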

5. Create experiment variants on the Satori dashboard.
   - Go to Experiments → Variants (aka buckets) in the upper navigation tab.
   - Create three variants and set the FF values for each variant as shown in the image. For example, the `passive` variant sets `interstitialMinLevel` to `5` and `exp_interstitialStart` to `passive`.
   - Note that you must set the `exp_[EXPERIMENT NAME]` FF value to the name of the experiment variant.
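LionSDK takes the variant name from the `exp_[EXPERIMENT NAME]` value. If that value does not match the variant, the `ab_cohort` event would therefore report the wrong variant. It can help to sanity-check the variant configuration before starting a phase. The following is a minimal Python sketch that assumes the dashboard overrides have been copied by hand into a plain dictionary. The `VARIANT_OVERRIDES` structure and `validate_variants` helper are hypothetical and are not a Satori export format.

```python
# Hypothetical check; VARIANT_OVERRIDES mirrors the values entered on the Satori dashboard.
EXPERIMENT_NAME = "interstitialStart"
EXPERIMENT_FLAG = "exp_" + EXPERIMENT_NAME

VARIANT_OVERRIDES = {
    "passive": {EXPERIMENT_FLAG: "passive", "interstitialMinLevel": "5"},
    "aggressive": {EXPERIMENT_FLAG: "aggressive", "interstitialMinLevel": "0"},
    "control": {EXPERIMENT_FLAG: "control", "interstitialMinLevel": "3"},
}


def validate_variants(overrides: dict[str, dict[str, str]], experiment_flag: str) -> list[str]:
    """Return a list of problems; an empty list means the configuration looks consistent."""
    problems = []
    flag_sets = {name: set(values) for name, values in overrides.items()}
    # Every variant should override the same set of flags.
    expected_flags = set().union(*flag_sets.values())
    for name, values in overrides.items():
        actual = values.get(experiment_flag)
        if actual != name:
            problems.append(f"{name}: {experiment_flag} should be {name!r}, got {actual!r}")
        missing = expected_flags - flag_sets[name]
        if missing:
            problems.append(f"{name}: missing overrides for {sorted(missing)}")
    return problems


if __name__ == "__main__":
    print(validate_variants(VARIANT_OVERRIDES, EXPERIMENT_FLAG))  # []
```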

6. Create a new phase to initiate the experiment; see here.
   - Go to Experiments → Phases → Create New Phase.
   - Provide configurations for the new phase:
      - The following parameters are at the PM’s discretion:
         - Phase Name
         - Description
         - Start Time
         - End Time
      - Define the % splits for the variants:
         - aggressive: 33%
         - passive: 33%
         - control: 34%
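The phase splits give each variant roughly one third of players, rounded to whole percentages that still add up to 100 (33 + 33 + 34). The short Python sketch below reproduces that arithmetic with a hypothetical `equal_splits` helper, which gives any leftover points to the last variants listed. It only illustrates the numbers to enter on the dashboard and says nothing about how Satori assigns players.

```python
# Hypothetical helper that only computes the percentages to enter for a phase.


def equal_splits(variant_names: list[str], total: int = 100) -> dict[str, int]:
    """Split `total` percent as evenly as possible; leftover points go to the last variants."""
    if not variant_names:
        raise ValueError("need at least one variant")
    n = len(variant_names)
    base, remainder = divmod(total, n)
    return {
        name: base + (1 if i >= n - remainder else 0)
        for i, name in enumerate(variant_names)
    }


if __name__ == "__main__":
    print(equal_splits(["aggressive", "passive", "control"]))
    # {'aggressive': 33, 'passive': 33, 'control': 34}
```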

7. When enough time has passed to conclude your experiment, end the running phase so that no new users join the experiment: Experiments → Phases → Halt Phase.
   - Click the "Export Variants" button. To ensure that existing participants keep receiving the same FF variants, the button will:
      - Create a custom audience for each experiment variant, containing the participants of that variant.
      - Define FF variants for the `interstitialMinLevel` Feature Flag.
      - Link each FF variant to the custom audience that received the matching treatment during the experiment.
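After "Export Variants", the value a player receives depends on custom audience membership rather than on an active experiment phase. The Python sketch below models that grandfathering. Former participants keep their variant's `interstitialMinLevel`, and everyone else, including new users, gets the flag default of `3`. The audience names, player IDs, and the `resolve_min_level` helper are hypothetical. In practice Satori evaluates the flag for you.

```python
# Hypothetical model of the post-experiment state created by "Export Variants".
DEFAULT_MIN_LEVEL = 3  # default value of interstitialMinLevel

# One custom audience per former variant, holding the participants of that variant.
AUDIENCES = {
    "interstitialStart_passive": {"player-1", "player-4"},
    "interstitialStart_aggressive": {"player-2"},
    "interstitialStart_control": {"player-3"},
}

# FF variants of interstitialMinLevel, each linked to the matching audience.
FLAG_VARIANTS = [
    ("interstitialStart_passive", 5),
    ("interstitialStart_aggressive", 0),
    ("interstitialStart_control", 3),
]


def resolve_min_level(player_id: str) -> int:
    """Return the grandfathered value for former participants, else the flag default."""
    for audience, value in FLAG_VARIANTS:
        if player_id in AUDIENCES.get(audience, set()):
            return value
    return DEFAULT_MIN_LEVEL


if __name__ == "__main__":
    print(resolve_min_level("player-1"))    # 5: stays on the passive treatment
    print(resolve_min_level("new-player"))  # 3: new users get the default
```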